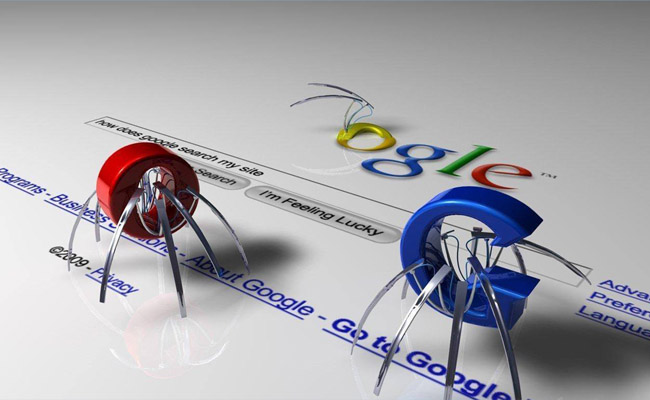

Site crawling is a major part of SEO. If bots can’t crawl your site effectively, you will notice that many important pages are not indexed in Google or other search engines.

A site with proper navigation helps bots crawl and index it deeply. This matters especially for a news site, where search engine bots should index new pages within minutes of publishing. That only happens when bots can crawl the site as soon as you publish something.

Search engine bots follow links to discover new pages, so one easy way to get indexed quickly is to get your site linked from popular sites, for example by commenting or guest posting.

1. Update Your Site Content Regularly

Content plays a vital role and is by far the most important criterion for search engines. Sites that update their content on a regular basis are more likely to get crawled more frequently. You can provide fresh content through a blog on your site.

This is simpler than trying to add web pages or constantly changing your page content.

Static sites are crawled less often than those that provide new content.

2. Server with Good Uptime

Host your blog on a reliable server with good uptime. Nobody wants Google’s bots to visit their blog during downtime. In fact, if your site is down for long, Google’s crawlers will lower their crawl rate accordingly, and you will find it harder to get new content indexed quickly.

3. Create Sitemaps

Submitting a sitemap is one of the first things you can do to help search engine bots discover your site quickly. In WordPress you can use the Google XML sitemap plugin to generate a dynamic sitemap and submit it to Google Webmaster Tools.
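For sites that don’t run WordPress, a sitemap can also be generated by hand. The following is a minimal sketch, assuming the standard sitemaps.org XML format; the `build_sitemap` helper and the example.com URLs are made up for illustration and are not taken from any plugin.

```python
from xml.etree import ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML as a string for (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        # lastmod uses a W3C date such as 2024-01-15.
        ET.SubElement(entry, "lastmod").text = last_modified
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages, for illustration only.
pages = [
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/first-post", "2024-01-14"),
]
sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

Save the output as `sitemap.xml` in the site root and submit that URL in the webmaster console. Regenerating it on every publish keeps it in step with new posts.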

4. Avoid Duplicate Content

Copied content decreases crawl rates. Search engines can easily pick up on duplicate content, which can result in less of your site being crawled. It can also result in the search engine banning your site or lowering your ranking. Provide fresh and relevant content instead. Content can be anything from blog posts to videos, and there are many ways to optimize it for search engines.
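To get a feel for how near-duplicate text can be spotted, here is a rough sketch that compares two passages by their overlapping three-word sequences (Jaccard similarity on word shingles). This is only an illustration of one common technique, not a description of how any search engine actually works. The function names and sample sentences are made up.

```python
import re


def shingles(text, size=3):
    """Return the set of consecutive word groups of the given size."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def similarity(a, b, size=3):
    """Jaccard similarity of two texts' shingle sets, from 0.0 to 1.0."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa or not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


# Sample sentences, for illustration only.
original = "Search engine bots follow links to crawl new pages on your site."
copied = "Search engine bots follow links to crawl new pages on your blog."
unrelated = "Host your blog on a reliable server with good uptime."

print(similarity(original, copied))     # high score: nearly identical text
print(similarity(original, unrelated))  # low score: no shared phrases
```

A check like this can be run across your own posts before publishing to catch pages that repeat each other almost word for word.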

5. Block Access to Unwanted Pages via robots.txt

There is no point in letting search engine bots crawl useless pages such as admin pages and back-end folders. We don’t want them indexed in Google, so bots should not spend time crawling that part of the site.
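Before uploading a robots.txt file, it helps to check that the rules block what you expect. The sketch below uses Python’s standard `urllib.robotparser` module to test a sample rule set. The rules and paths are assumptions based on a typical WordPress layout, so adjust them to your own site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules; the paths are WordPress-style examples.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /cgi-bin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_crawlable(path, user_agent="Googlebot"):
    """Return True if the rules above let the given bot fetch the path."""
    return parser.can_fetch(user_agent, path)


for path in ["/blog/first-post", "/wp-admin/options.php"]:
    print(path, "->", "crawl" if is_crawlable(path) else "blocked")
```

With these rules, blog posts stay open to bots while anything under `/wp-admin/` or `/cgi-bin/` is skipped.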